70-534 | Microsoft 70-534 Free Practice Questions 2021


Free 70-534 practice questions:

NEW QUESTION 1

You are designing a Windows Azure application. The application includes two web roles and three instances of a worker role. The web roles will send requests to the worker role through one or more Windows Azure Queues. You have the following requirements:

-Ensure that each request is processed exactly one time.

-Minimize the idle time of each worker role instance.

-Maximize the reliability of request processing.

You need to recommend a queue design for sending requests to the worker role. What should you recommend?

- A. Create a queue for each combination of web roles and worker role instances. Send requests to all worker role instances based on the sending web role.

- B. Create a single queue. Send all requests on the single queue.

- C. Create a queue for each worker role instance. Send requests on each worker queue by using a round robin rotation.

- D. Create a queue for each web role. Send requests on all queues at the same time.

Answer: B

Explanation: To communicate with the worker role, a web role instance places messages on to a queue. A worker role instance polls the queue for new messages, retrieves them, and processes them. There are a couple of important things to know about the way the queue service works in Azure. First, you reference a queue by name, and multiple role instances can share a single queue. Second, there is no concept of a typed message; you construct a message from either a string or a byte array. An individual message can be no more than 64 kilobytes (KB) in size.

References:

https://msdn.microsoft.com/en-gb/library/ff803365.aspx

http://azure.microsoft.com/en-gb/documentation/articles/cloud-services-dotnet-multi-tier-app-using-service-bus-queues/
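The single shared queue described in the explanation can be sketched as a competing-consumers pattern. This is an in-process analogy that uses Python's standard `queue` module rather than the Azure queue service; the function name `run_workers` and the request names are hypothetical. The real service relies on visibility timeouts, so production consumers should also be idempotent.

```python
import queue
import threading


def run_workers(requests, worker_count=3):
    """Drain one shared queue with several workers; return (worker_id, request) pairs."""
    shared = queue.Queue()
    for request in requests:
        shared.put(request)

    processed = []
    lock = threading.Lock()

    def worker(worker_id):
        while True:
            try:
                # Each get hands a message to exactly one worker.
                request = shared.get_nowait()
            except queue.Empty:
                return  # nothing left, so this worker stops
            with lock:
                processed.append((worker_id, request))
            shared.task_done()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return processed


# Two web roles would both write to the same queue; here the requests are pre-loaded.
results = run_workers([f"request-{n}" for n in range(10)])
```

Because every worker pulls from the same queue, no worker sits idle while another has a backlog, which is the property the second requirement asks for.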

NEW QUESTION 2

HOTSPOT

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next sections of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question on this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Background

General

Trey Research is the global leader in analytical data collection and research. Trey Research houses its servers in a highly secure server environment. The company has continuous monitoring, surveillance, and support to prevent unauthorized access and to protect data.

The company uses advanced security measures including firewalls, security guards, and surveillance to ensure the continued service and protection of data from natural disaster, intruders, and disruptive events.

Trey Research has recently expanded its operations into the cloud by using Microsoft Azure. The company creates an Azure virtual network and a Virtual Machine (VM) for moving on-premises Subversion repositories to the cloud. Employees access Trey Research applications hosted on-premises and in the cloud by using credentials stored on-premises.

Applications

Trey Research hosts two mobile apps on Azure, DataViewer and DataManager. The company uses Azure-hosted web apps for internal and external users. Federated partners of Trey Research have a single sign-on (SSO) experience with the DataViewer application.

Architecture

You have an Azure Virtual Network (VNET) named TREYRESEARCH_VNET. The VNET includes all hosted VMs. The virtual network includes a subnet named Frontend and a subnet named RepoBackend. A resource group has been created to contain the TREYRESEARCH_VNET, DataManager and DataViewer. You manage VMs by using System Center VM Manager (SCVMM). Data for specific high security projects and clients are hosted on-premises. Data for other projects and clients are hosted in the cloud.

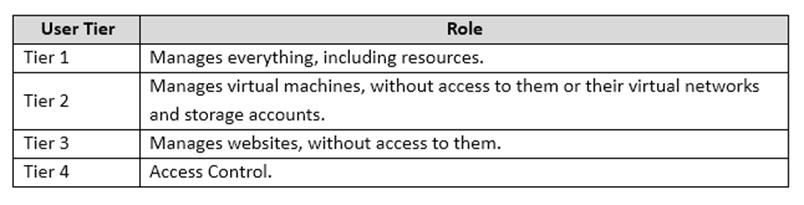

Azure Administration

DataManager

The DataManager app connects to a RESTful service. It allows users to retrieve, update, and delete Trey Research data.

Requirements

General

You have the following general requirements:

Disaster recovery

Disaster recovery and business continuity plans must use a single, integrated service that supports the following features:

Security

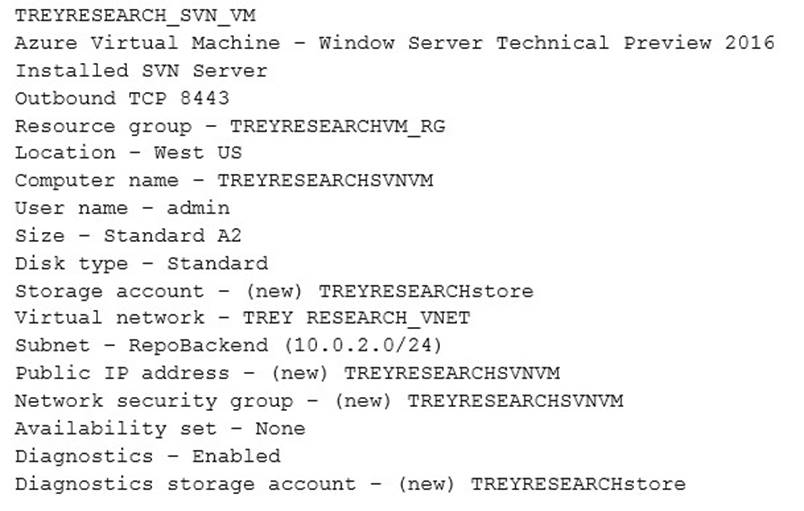

You identify the following security requirements:

Subversion server

Subversion Server Sheet

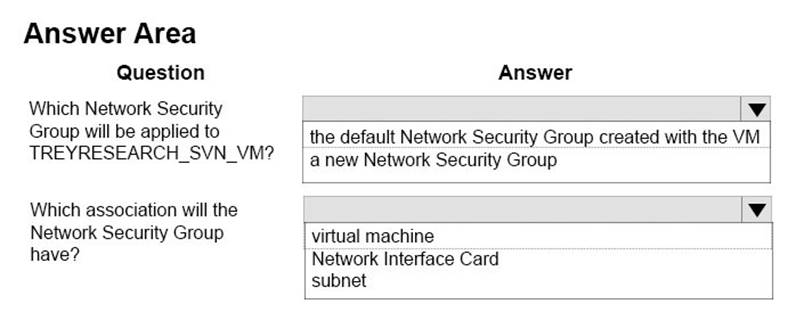

You need to enforce the security requirements for all subversion servers.

How should you configure network security? To answer, select the appropriate answer from each list in the answer area.

Answer:

Explanation: You host multiple Subversion (SVN) repositories in the RepoBackend subnet. The SVN servers on this subnet must use inbound and outbound TCP at port 8443.

NEW QUESTION 3

A company has a very large dataset that includes sensitive information. The dataset is over 30 TB in size.

You have a standard business-class ISP internet connection that is rated at 100 megabits/second.

You have 10 4-TB hard drives that are approved to work with the Azure Import/Export Service.

You need to migrate the dataset to Azure. The solution must meet the following requirements:

* The dataset must be transmitted securely to Azure.

* Network bandwidth must not increase.

* Hardware costs must be minimized.

What should you do?

- A. Prepare the drives with the Azure Import/Export tool and then create the import job. Ship the drives to Microsoft via a supported carrier service.

- B. Create an export job and then encrypt the data on the drives by using the Advanced Encryption Standard (AES). Create a destination Blob to store the export data.

- C. Create an import job and then encrypt the data on the drives by using the Advanced Encryption Standard (AES). Create a destination Blob to store the import data.

- D. Prepare the drives by using Sysprep.exe and then create the import job. Ship the drives to Microsoft via a supported carrier service.

Answer: A

Explanation: You can use the Microsoft Azure Import/Export service to transfer large amounts of file data to Azure Blob storage in situations where uploading over the network is prohibitively expensive or not feasible.

References: http://azure.microsoft.com/en-gb/documentation/articles/storage-import-export-service/
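A rough calculation shows why uploading over the network is impractical here. The sketch below assumes decimal units (1 TB = 10^12 bytes), a link that sustains its full rated 100 megabits/second, and no protocol overhead; all of these are simplifying assumptions, so the real upload would take longer.

```python
# Figures from the question.
DATASET_TB = 30
LINK_MBPS = 100
DRIVES = 10
DRIVE_TB = 4

# Upload time at the full rated link speed (an optimistic assumption).
dataset_bits = DATASET_TB * 10**12 * 8
seconds = dataset_bits / (LINK_MBPS * 10**6)
days = seconds / 86_400

# Total capacity of the approved drives already on hand.
drive_capacity_tb = DRIVES * DRIVE_TB

print(f"Upload time: about {days:.1f} days")
print(f"Drive capacity: {drive_capacity_tb} TB for a {DATASET_TB} TB dataset")
```

Under these assumptions the upload takes roughly four weeks of saturating the link, while the ten existing drives already hold the dataset, so shipping them needs no new hardware or bandwidth.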

NEW QUESTION 4

HOTSPOT

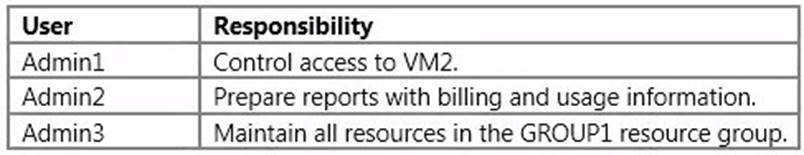

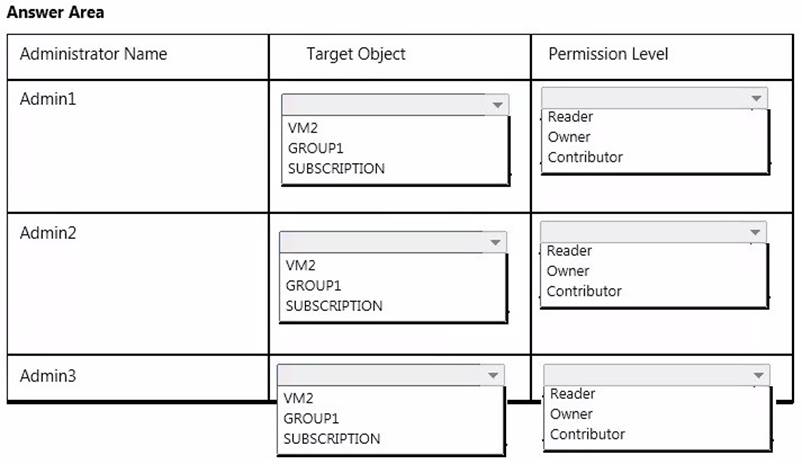

A company uses Azure for several virtual machine (VM) and website workloads. The company plans to assign administrative roles to a specific group of users. You have a resource group named GROUP1 and a virtual machine named VM2.

The users have the following responsibilities:

You need to assign the appropriate level of privileges to each of the administrators by using the principle of least privilege.

What should you do? To answer, select the appropriate target objects and permission levels in the answer area.

Answer:

Explanation: * Owner can manage everything, including access.

* Contributors can manage everything except access.

Note: Azure role-based access control allows you to grant appropriate access to Azure AD users, groups, and services, by assigning roles to them on a subscription or resource group or individual resource level.

References: http://azure.microsoft.com/en-us/documentation/articles/role-based-access-control-configure/
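The difference between Owner and Contributor, and the way an assignment on a resource group carries down to the resources inside it, can be modelled in a few lines. This is a toy model, not the Azure authorization API: the action names, the scope strings, the user names, and the placement of VM2 inside GROUP1 are all assumptions made for illustration.

```python
# Owner can manage everything including access; Contributor cannot manage access.
ROLE_ACTIONS = {
    "Owner": {"read", "write", "delete", "manage_access"},
    "Contributor": {"read", "write", "delete"},
}


def is_allowed(assignments, user, action, scope):
    """Return True if any assignment at this scope or a parent scope grants the action."""
    for assigned_user, role, assigned_scope in assignments:
        in_scope = scope == assigned_scope or scope.startswith(assigned_scope + "/")
        if assigned_user == user and in_scope and action in ROLE_ACTIONS[role]:
            return True
    return False


# Hypothetical assignments: one at resource-group level, one on a single VM.
assignments = [
    ("alice", "Owner", "/GROUP1"),
    ("bob", "Contributor", "/GROUP1/VM2"),
]

print(is_allowed(assignments, "alice", "manage_access", "/GROUP1/VM2"))
print(is_allowed(assignments, "bob", "manage_access", "/GROUP1/VM2"))
```

Least privilege then means picking the narrowest scope and the weakest role that still covers each administrator's responsibilities.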

NEW QUESTION 5

You need to support loan processing for the WGBLoanMaster app. Which technology should you use?

- A. Azure Storage Queues

- B. Azure Service Fabric

- C. Azure Service Bus Queues

- D. Azure Event Hubs

Answer: D

NEW QUESTION 6

You need to support web and mobile application secure logons. Which technology should you use?

- A. Azure Active Directory B2B

- B. OAuth 1.0

- C. LDAP

- D. Azure Active Directory B2C

Answer: D

NEW QUESTION 7

HOTSPOT

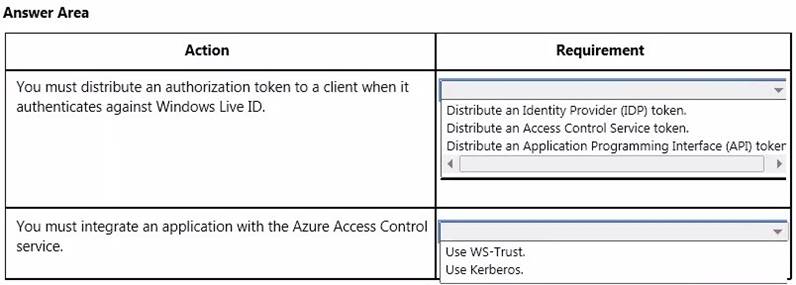

Resources must authenticate to an identity provider. You need to configure the Azure Access Control service.

What should you recommend? To answer, select the appropriate responses for each requirement in the answer area.

Answer:

Explanation: Box 1:

* Token – A user gains access to an RP application by presenting a valid token that was issued by an authority that the RP application trusts.

* Identity Provider (IP) – An authority that authenticates user identities and issues security tokens, such as Microsoft account (Windows Live ID), Facebook, Google, Twitter, and Active Directory. When Azure Access Control (ACS) is configured to trust an IP, it accepts and validates the tokens that the IP issues. Because ACS can trust multiple IPs at the same time, when your application trusts ACS, your application can offer users the option to be authenticated by any of the IPs that ACS trusts on your behalf.

Box 2: WS-Trust is a web service (WS-*) specification and Organization for the Advancement of Structured Information Standards (OASIS) standard that deals with the issuing, renewing, and validating of security tokens, as well as with providing ways to establish, assess the presence of, and broker trust relationships between participants in a secure message exchange. Azure Access Control (ACS) supports WS-Trust 1.3.

Incorrect: ACS does not support Kerberos.

NEW QUESTION 8

DRAG DROP

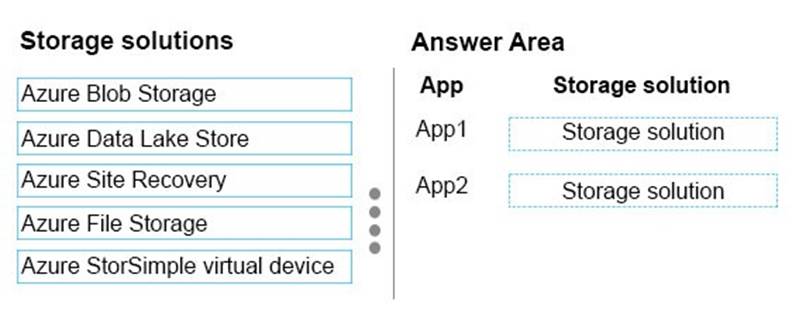

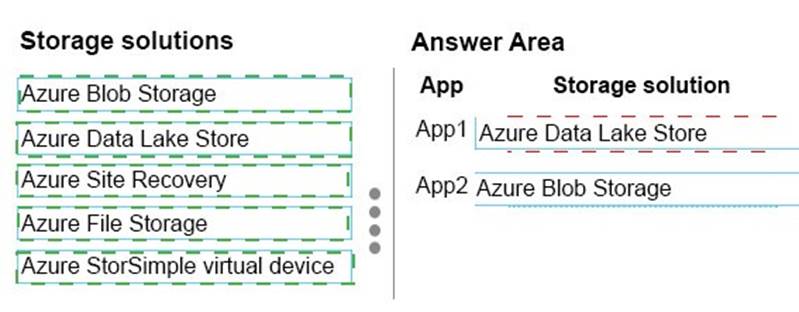

You are designing an Azure storage solution for a company. The company has the following storage requirements:

* An app named App1 uses data analytics on stored data. App1 must store data on a hierarchical file system that uses Azure Active Directory (Azure AD) access control lists.

* An app named App2 must have access to object-based storage. The storage must support role-based access control and use shared access signature keys.

You need to design the storage solution.

Which storage solution should you use for each app? To answer, drag the appropriate storage solutions to the correct apps. Each storage solution may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

NEW QUESTION 9

DRAG DROP

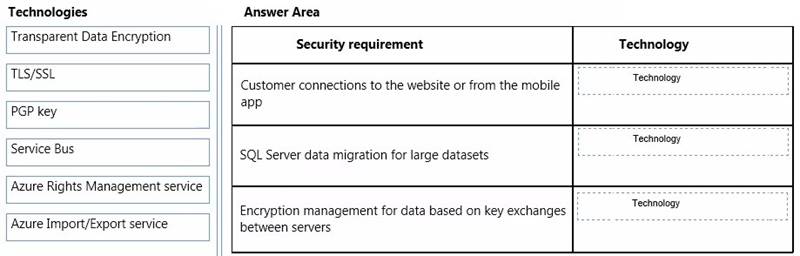

You need to ensure that customer data is secured both in transit and at rest.

Which technologies should you recommend? To answer, drag the appropriate technology to the correct security requirement. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

Answer:

Explanation: * Azure Rights Management service

Azure Rights Management service uses encryption, identity, and authorization policies to help secure your files and email, and it works across multiple devices (phones, tablets, and PCs). Information can be protected both within your organization and outside your organization because that protection remains with the data, even when it leaves your organization’s boundaries.

* Transparent Data Encryption

Transparent Data Encryption (often abbreviated to TDE) is a technology employed by both Microsoft and Oracle to encrypt database files. TDE offers encryption at file level. TDE solves the problem of protecting data at rest, encrypting databases both on the hard drive and consequently on backup media.

* TLS/SSL

Transport Layer Security (TLS) and its predecessor, Secure Sockets Layer (SSL), are cryptographic protocols designed to provide communications security over a computer network. They use X.509 certificates and hence asymmetric cryptography to authenticate the counterparty with whom they are communicating, and to negotiate a symmetric key.

References:

https://technet.microsoft.com/en-us/library/jj585004.aspx

http://en.wikipedia.org/wiki/Transparent_Data_Encryption

http://en.wikipedia.org/wiki/Transport_Layer_Security
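For the in-transit requirement, a client enforces the X.509 authentication described above by verifying the server certificate and host name. The sketch below uses Python's standard `ssl` module only to illustrate those settings; the TLS 1.2 floor is an assumption chosen for the example, not a requirement stated in the question.

```python
import ssl

# The default context verifies the server's X.509 certificate chain
# and checks that the certificate matches the host name.
context = ssl.create_default_context()

# Assumed policy for this example: refuse anything older than TLS 1.2.
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.verify_mode == ssl.CERT_REQUIRED)
print(context.check_hostname)
```

A context configured this way can be passed to standard-library clients such as `http.client.HTTPSConnection` through the `context` parameter.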

NEW QUESTION 10

You administer an Azure Storage account with a blob container. You enable Storage account logging for read, write and delete requests. You need to reduce the costs associated with storing the logs. What should you do?

- A. Execute Delete Blob requests over https.

- B. Create an export job for your container.

- C. Set up a retention policy.

- D. Execute Delete Blob requests over http.

Answer: C

Explanation: There are two ways to delete Storage Analytics data: by manually making deletion requests or by setting a data retention policy. Manual requests to delete Storage Analytics data are billable, but delete requests resulting from a retention policy are not billable.

References: https://docs.microsoft.com/en-us/rest/api/storageservices/Setting-a-Storage-Analytics-Data-Retention-Policy?redirectedfrom=MSDN
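The effect of a retention policy can be illustrated with a small date filter. This only models the rule; in the real service the deletions happen on the service side once the policy is set. The function name `apply_retention`, the sample dates, and the exact cutoff boundary are assumptions.

```python
from datetime import date, timedelta


def apply_retention(log_dates, today, retention_days):
    """Keep only the log dates that fall inside the retention window."""
    cutoff = today - timedelta(days=retention_days)
    return [d for d in log_dates if d >= cutoff]


# Hypothetical log dates and a 7-day retention window.
logs = [date(2021, 1, 1), date(2021, 1, 20), date(2021, 1, 30)]
kept = apply_retention(logs, today=date(2021, 1, 31), retention_days=7)
print(kept)
```

Older logs drop out automatically, which reduces stored volume without the billable delete requests that manual cleanup would generate.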

NEW QUESTION 11

You deploy an application as a cloud service in Azure. The application consists of five instances of a web role. You need to move the web role instances to a different subnet. Which file should you update?

- A. Service definition

- B. Diagnostics configuration

- C. Service configuration

- D. Network configuration

Answer: C

Explanation: The service configuration file specifies the number of role instances to deploy for each role in the service, the values of any configuration settings, and the thumbprints for any certificates associated with a role. If the service is part of a Virtual Network, configuration information for the network must be provided in the service configuration file, as well as in the virtual networking configuration file. The default extension for the service configuration file is .cscfg.

References: https://msdn.microsoft.com/en-us/library/azure/ee758710.aspx

NEW QUESTION 12

You manage a hybrid environment for a company. The company plans to manage the environment by using Microsoft System Center 2012 R2.

You need to deploy the correct component to enable management across the environment. Which component should you deploy?

- A. Windows Azure SQL Database Management Pack

- B. System Center Management Pack for Windows Azure Pack

- C. Cross Platform Audit Collection Services Management Pack

- D. System Center Management Pack for Windows Server Cluster

Answer: B

NEW QUESTION 13

HOTSPOT

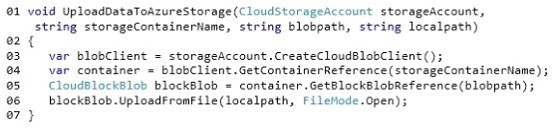

You have a WebJob object that runs as part of an Azure website. The WebJob object uses features from the Azure SDK for .NET.

You use a well-formed but invalid storage key to create the storage account that you pass into the UploadDataToAzureStorage method.

The WebJob object contains the following code segment. Line numbers are included for reference only.

Answer:

Explanation: For blob storage, there is a retry policy implemented by default, so if you do nothing, it will do what’s called exponential retries. It will fail, then wait a bit of time and try again; if it fails again, it will wait a little longer and try again, until it hits the maximum retry count.

References: https://www.simple-talk.com/cloud/platform-as-a-service/azure-blob-storage-part-3-using-the-storage-client-library/
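The exponential retry behaviour described in the explanation can be sketched generically. This is not the storage client library's retry policy; `call_with_retries`, its default attempt count, and its base delay are hypothetical values chosen for illustration.

```python
import time


def call_with_retries(operation, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call operation, doubling the wait after each failure until attempts run out."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # maximum retry count reached, surface the error
            sleep(base_delay * 2 ** attempt)


# Demonstration: fail twice, then succeed. Waits are recorded instead of slept.
waits = []
attempts = {"count": 0}


def flaky_upload():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "uploaded"


result = call_with_retries(flaky_upload, sleep=waits.append)
print(result, waits)
```

Injecting `sleep` keeps the example fast to run while still showing the growing delays between attempts.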

NEW QUESTION 14

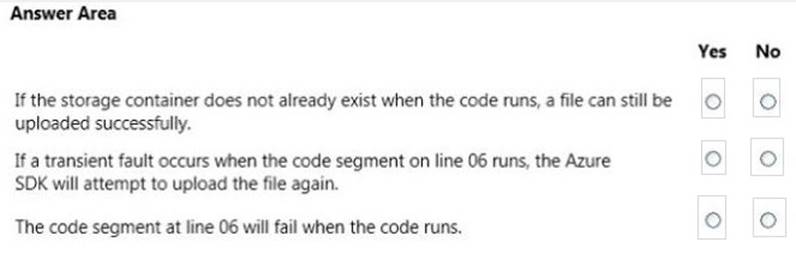

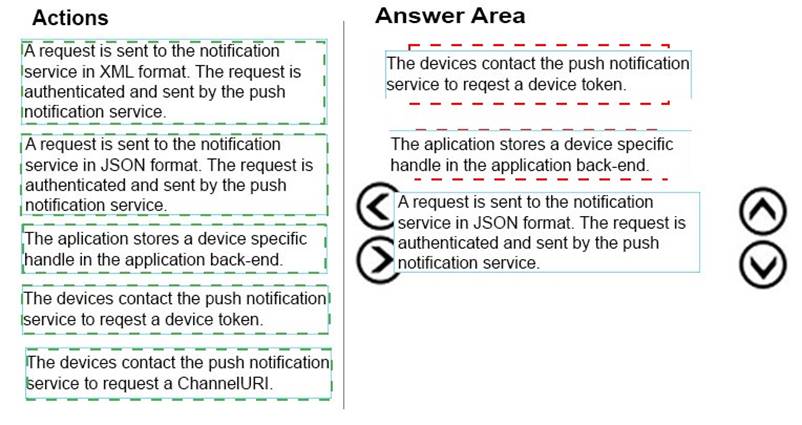

DRAG DROP

You are training a new developer.

You need to describe the process flow for sending a notification.

Which three actions must be performed in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer:

Explanation:

NEW QUESTION 15

You need to implement the security requirements. What should you implement?

- A. the GraphAPI to query the directory

- B. LDAP to query the directory

- C. single sign-on

- D. user certificates

Answer: C

References:

https://blogs.msdn.microsoft.com/plankytronixx/2010/11/27/single-sign-on-between-on-premise-apps-windows-azure-apps-and-office-365-services/

NEW QUESTION 16

You are designing a Windows Azure application that will use Windows Azure Table storage. The application will allow teams of users to collaborate on projects. Each user is a member of only one team. You have the following requirements:

-Ensure that each user can efficiently query records related to his or her team's projects.

-Minimize data access latency.

You need to recommend an approach for partitioning table storage entities. What should you recommend?

- A. Partition by user

- B. Partition by team

- C. Partition by project

- D. Partition by the current date

Answer: B

Explanation: Partitions represent a collection of entities with the same PartitionKey values. Partitions are always served from one partition server and each partition server can serve one or more partitions. A partition server has a rate limit of the number of entities it can serve from one partition over time.

References: https://docs.microsoft.com/en-us/rest/api/storageservices/Designing-a-Scalable-Partitioning-Strategy-for-Azure-Table-Storage?redirectedfrom=MSDN
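Partitioning by team means the team identifier becomes the PartitionKey, so one team's records live together and a team query touches a single partition. The sketch below models that layout with plain dictionaries rather than the Table storage API; using the project identifier as the RowKey, and the sample team and project names, are assumptions.

```python
from collections import defaultdict

# PartitionKey (team) -> {RowKey (project id): entity}
table = defaultdict(dict)


def insert(team, project_id, entity):
    """Store an entity under its team partition."""
    table[team][project_id] = entity


def query_team(team):
    """Read every entity for one team from a single partition."""
    return list(table.get(team, {}).values())


# Hypothetical data for two teams.
insert("team-red", "proj-1", {"name": "Launch plan"})
insert("team-red", "proj-2", {"name": "Budget"})
insert("team-blue", "proj-9", {"name": "Roadmap"})

print(query_team("team-red"))
```

Partitioning by user would scatter a team's projects across several partitions, and partitioning by date would concentrate all current writes in one partition.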

NEW QUESTION 17

You need to recommend an appropriate solution for the data mining requirements. Which solution should you recommend?

- A. Design a schedule process that allocates tasks to multiple virtual machines, and use the Azure Portal to create new VMs as needed.

- B. Use Azure HPC Scheduler Tools to schedule jobs and automate scaling of virtual machines.

- C. Use Traffic Manager to allocate tasks to multiple virtual machines, and use the Azure Portal to spin up new virtual machines as needed.

- D. Use Windows Server HPC Pack on-premises to schedule jobs and automate scaling of virtual machines in Azure.

Answer: B

Explanation: Scenario:

Virtual machines:

✑ The data mining solution must support the use of hundreds to thousands of processing cores.

✑ Minimize the number of virtual machines by using more powerful virtual machines. Each virtual machine must always have eight or more processor cores available.

✑ Allow the number of processor cores dedicated to an analysis to grow and shrink automatically based on the demand of the analysis.

✑ Virtual machines must use remote direct memory access (RDMA) to improve performance.

Task scheduling:

The solution must automatically schedule jobs. The scheduler must distribute the jobs based on the demand and available resources.

NEW QUESTION 18

You manage a cloud service that utilizes an Azure Service Bus queue. You need to ensure that messages that are never consumed are retained. What should you do?

- A. Check the MOVE TO THE DEAD-LETTER SUBQUEUE option for Expired Messages in the Azure Portal.

- B. From the Azure Management Portal, create a new queue and name it Dead-Letter.

- C. Execute the Set-AzureServiceBus PowerShell cmdlet.

- D. Execute the New-AzureSchedulerStorageQueueJob PowerShell cmdlet.

Answer: A

Explanation: Deadlettering – From time to time a message may arrive in your queue that just can’t be processed. Each time the message is retrieved for processing the consumer throws an exception and cannot process the message. These are often referred to as poison messages and can happen for a variety of reasons, such as a corrupted payload or a message containing an unknown payload inadvertently delivered to the wrong queue. When this happens, you do not want your system to come grinding to a halt simply because one of the messages can’t be processed.

Ideally the message will be set aside to be reviewed later and processing can continue on to other messages in the queue. This process is called dead-lettering a message, and Service Bus Brokered Messaging supports it by default. If a message fails to be processed and appears back on the queue ten times, it will be placed into a dead letter queue. You can control the number of failures it takes for a message to be dead lettered by setting the MaxDeliveryCount property on the queue. When a message is dead lettered it is actually placed on a sub queue which can be accessed just like any other Service Bus queue. For a queue named samplequeue, the dead letter queue path would be samplequeue/$DeadLetterQueue.

Automatic dead lettering does not occur in the ReceiveAndDelete mode as the message has already been removed from the queue.

References: https://www.simple-talk.com/cloud/cloud-data/an-introduction-to-windows-azure-service-bus-brokered-messaging/
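The delivery-count rule described above can be simulated in memory. This is not the Service Bus SDK; `process_queue` and the sample messages are hypothetical, and the exact threshold (dead-letter once the delivery count reaches the maximum) is an assumption about how the limit is applied.

```python
from collections import deque


def process_queue(messages, handler, max_delivery_count=10):
    """Deliver messages; move any that keep failing to a dead-letter list."""
    main = deque((message, 0) for message in messages)
    done, dead_letter = [], []
    while main:
        message, deliveries = main.popleft()
        deliveries += 1
        try:
            handler(message)
            done.append(message)
        except Exception:
            if deliveries >= max_delivery_count:
                dead_letter.append(message)  # set aside for later review
            else:
                main.append((message, deliveries))  # back on the queue
    return done, dead_letter


seen = []


def handler(message):
    seen.append(message)
    if message == "poison":
        raise ValueError("cannot process payload")


done, dead_letter = process_queue(["a", "poison", "b"], handler, max_delivery_count=3)
print(done, dead_letter)
```

The healthy messages are processed normally while the failing one is retried up to the limit and then parked, so the queue never stalls on it.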

NEW QUESTION 19

You administer an application that has an Azure Active Directory (Azure AD) B2C tenant named contosob2c.onmicrosoft.com.

Users must be able to use their existing Facebook account to sign in to the application. You need to register Facebook with your Azure AD B2C tenant.

What should you add?

- A. a sign-up policy

- B. a custom user attribute

- C. a local account

- D. a built-in user attribute

- E. an identity provider

Answer: E

NEW QUESTION 20

You need to configure availability for the virtual machines that the company is migrating to Azure.

What should you implement?

- A. Traffic Manager

- B. Availability Sets

- C. Virtual Machine Autoscaling

- D. Cloud Services

Answer: D

Explanation: Scenario: VanArsdel plans to migrate several virtual machine (VM) workloads into Azure.

Topic 2, Trey Research

Background

Overview

Trey Research conducts agricultural research and sells the results to the agriculture and food industries. The company uses a combination of on-premises and third-party server clusters to meet its storage needs. Trey Research has seasonal demands on its services, with up to 50 percent drops in data capacity and bandwidth demand during low-demand periods. They plan to host their websites in an agile, cloud environment where the company can deploy and remove its websites based on its business requirements rather than the requirements of the hosting company.

A recent fire near the datacenter that Trey Research uses raises the management team's awareness of the vulnerability of hosting all of the company's websites and data at any single location. The management team is concerned about protecting its data from loss as a result of a disaster.

Websites

Trey Research has a portfolio of 300 websites and associated background processes that are currently hosted in a third-party datacenter. All of the websites are written in ASP.NET, and the background processes use Windows Services. The hosting environment costs Trey Research approximately $25 million in hosting and maintenance fees.

Infrastructure

Trey Research also has on-premises servers that run VMs to support line-of-business applications. The company wants to migrate the line-of-business applications to the cloud, one application at a time. The company is migrating most of its production VMs from an aging VMware ESXi farm to a Hyper-V cluster that runs on Windows Server 2012.

Applications

DistributionTracking

Trey Research has a web application named DistributionTracking. This application constantly collects real-time data that tracks worldwide distribution points to customer retail sites. This data is available to customers at all times.

The company wants to ensure that the distribution tracking data is stored at a location that is geographically close to the customers who will be using the information. The system must continue running in the event of VM failures without corrupting data. The system is processor intensive and should be run in a multithreading environment.

HRApp

The company has a human resources (HR) application named HRApp that stores data in an on-premises SQL Server database. The database must have at least two copies, but data to support backups and business continuity must stay in Trey Research locations only. The data must remain on-premises and cannot be stored in the cloud.

HRApp was written by a third party, and the code cannot be modified. The human resources data is used by all business offices, and each office requires access to the entire database. Users report that HRApp takes all night to generate the required payroll reports, and they would like to reduce this time.

MetricsTracking

Trey Research has an application named MetricsTracking that is used to track analytics for the DistributionTracking web application. The data MetricsTracking collects is not customer-facing. Data is stored on an on-premises SQL Server database, but this data should be moved to the cloud. Employees at other locations access this data by using a remote desktop connection to connect to the application, but latency issues degrade the functionality.

Trey Research wants a solution that allows remote employees to access metrics data without using a remote desktop connection. MetricsTracking was written in-house, and the development team is available to make modifications to the application if necessary. However, the company wants to continue to use SQL Server for MetricsTracking.

Business Requirements

Business Continuity

You have the following requirements:

✑ Move all customer-facing data to the cloud.

✑ Web servers should be backed up to geographically separate locations.

✑ If one website becomes unavailable, customers should automatically be routed to websites that are still operational.

✑ Data must be available regardless of the operational status of any particular website.

✑ The HRApp system must remain on-premises and must be backed up.

✑ The MetricsTracking data must be replicated so that it is locally available to all Trey Research offices.

Auditing and Security

You have the following requirements:

✑ Both internal and external consumers should be able to access research results.

✑ Internal users should be able to access data by using their existing company credentials without requiring multiple logins.

✑ Consumers should be able to access the service by using their Microsoft credentials.

✑ Applications written to access the data must be authenticated.

✑ Access and activity must be monitored and audited.

✑ Ensure the security and integrity of the data collected from the worldwide distribution points for the distribution tracking application.

Storage and Processing

You have the following requirements:

✑ Provide real-time analysis of distribution tracking data by geographic location.

✑ Collect and store large datasets in real time for customer use.

✑ Locate the distribution tracking data as close to the central office as possible to improve bandwidth.

✑ Co-locate the distribution tracking data as close to the customer as possible based on the customer's location.

✑ Distribution tracking data must be stored in the JSON format and indexed by metadata that is stored in a SQL Server database.

✑ Data in the cloud must be stored in geographically separate locations, but kept within the same political boundaries.

Technical Requirements

Migration

You have the following requirements:

✑ Deploy all websites to Azure.

✑ Replace on-premises and third-party physical server clusters with cloud-based solutions.

✑ Optimize the speed for retrieving existing JSON objects that contain the distribution tracking data.

✑ Recommend strategies for partitioning data for load balancing.

Auditing and Security

You have the following requirements:

✑ Use Active Directory for internal and external authentication.

✑ Use OAuth for application authentication.

Business Continuity

You have the following requirements:

✑ Data must be backed up to separate geographic locations.

✑ Web servers must run concurrent versions of all websites in distinct geographic locations.

✑ Use Azure to back up the on-premises MetricsTracking data.

✑ Use Azure virtual machines as a recovery platform for MetricsTracking and HRApp.

✑ Ensure that there is at least one additional on-premises recovery environment for the HRApp.
